NESP

Neural Estimation of Spacecraft Pose.

Funded by Service public de Wallonie (SPW).

Funded by Aerospacelab.

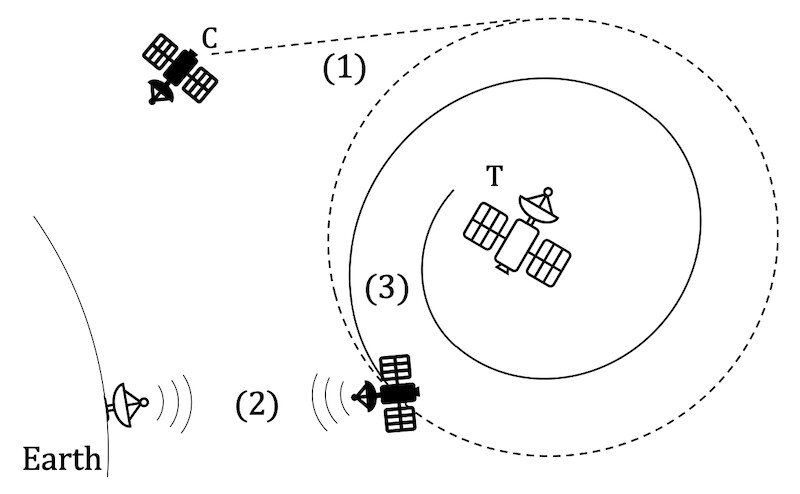

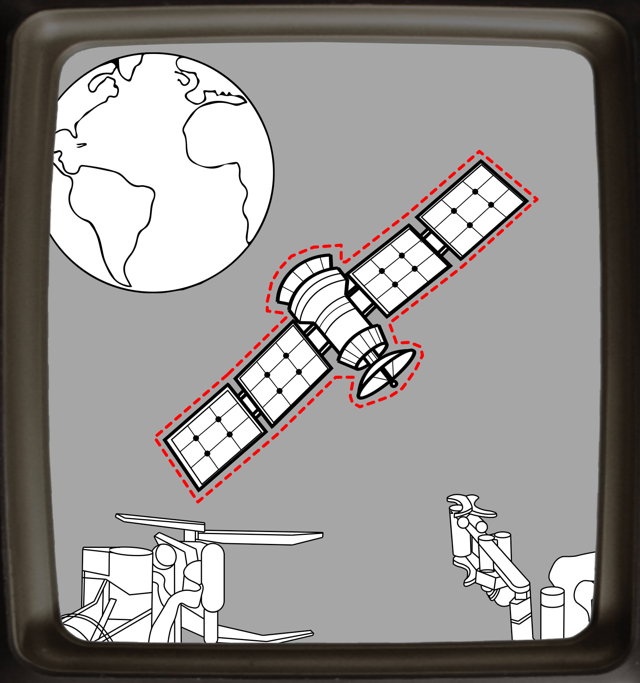

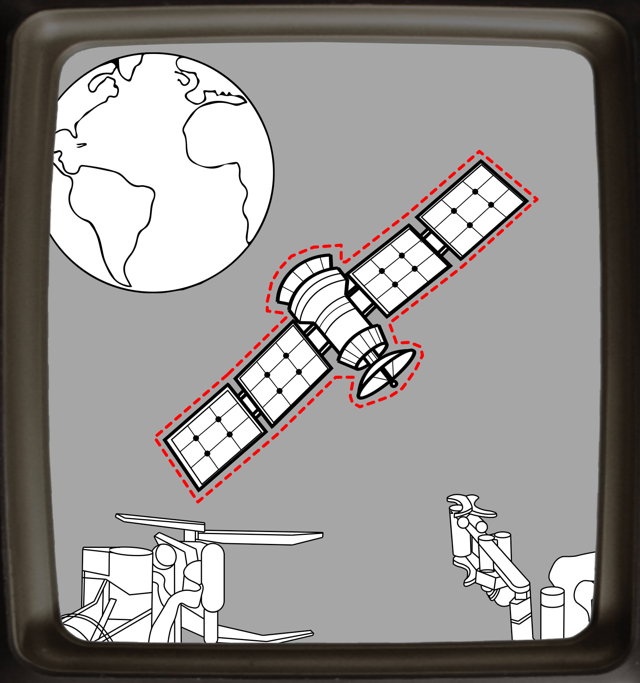

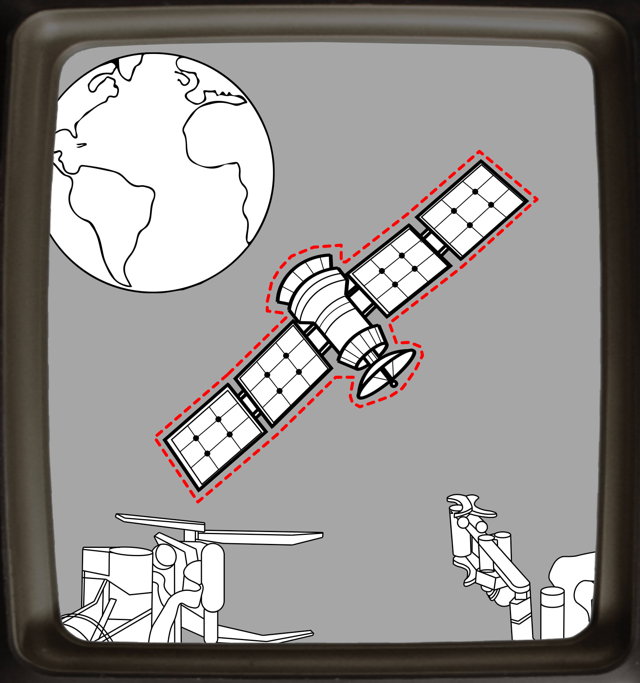

This project focuses on 6D pose estimation for spacecraft, that is, recovering the relative position and orientation of a target. This capability is fundamental to autonomous rendezvous and proximity operations in space, such as on-orbit servicing and debris removal. The task is especially hard when only a monocular camera is available, which is often the case because lidars and depth cameras carry cost, mass, and power penalties in space. Two further hurdles are the distinctive lighting conditions encountered in orbit and the difficulty of acquiring large datasets of real images. To overcome these limitations, the research explores deep-learning-based solutions that are trained on synthetic data while addressing the domain gap between synthetic and real-world images [1], [2].
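To make the notion of a 6D pose concrete, the sketch below projects a 3D point of the target into the image, given a rotation and a translation. It assumes a quaternion in `(w, x, y, z)` order and an ideal pinhole camera without distortion; the function names and the intrinsics `fx`, `fy`, `cx`, `cy` are illustrative and not taken from the project.

```python
import math


def quat_to_rotmat(q):
    """Convert a quaternion (w, x, y, z) to a 3x3 rotation matrix."""
    w, x, y, z = q
    n = math.sqrt(w * w + x * x + y * y + z * z)
    w, x, y, z = w / n, x / n, y / n, z / n  # normalise defensively
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ]


def project(point_body, q, t, fx, fy, cx, cy):
    """Project a point given in the target frame to pixel coordinates.

    q, t: assumed pose of the target expressed in the camera frame.
    """
    R = quat_to_rotmat(q)
    # Rigid transform: p_cam = R * p_body + t
    pc = [sum(R[i][j] * point_body[j] for j in range(3)) + t[i] for i in range(3)]
    if pc[2] <= 0:
        raise ValueError("point is behind the camera")
    # Pinhole projection
    return (fx * pc[0] / pc[2] + cx, fy * pc[1] / pc[2] + cy)


if __name__ == "__main__":
    # Target 10 m in front of the camera, no rotation
    print(project((1.0, 0.0, 0.0), (1, 0, 0, 0), (0, 0, 10.0), 1000, 1000, 500, 400))
```

Pose estimation is the inverse problem: given the image, recover `q` and `t`.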

One key area of investigation is improving how well pose estimation networks generalize to unseen, real-world orbital conditions. Two contributions address this. First, a novel end-to-end neural architecture uses intermediate keypoint detection to decouple image processing from pose estimation, which improves performance and speeds up processing [1], [2]. Second, a learning strategy combines aggressive data augmentation policies with multi-task learning. This strategy encourages the network to learn domain-invariant features and thereby bridges the domain gap without requiring prior knowledge of the target domain, a significant advantage over domain adaptation techniques [2].
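As a rough illustration of what a photometric augmentation step can look like, the sketch below applies random brightness and contrast jitter plus Gaussian noise to a grayscale image stored as nested lists with values in [0, 1]. The choice of transforms and all parameter ranges are assumptions made for this example; the actual augmentation policies are described in [2] and are not reproduced here.

```python
import random


def augment(image, rng, max_brightness=0.2, contrast_range=(0.6, 1.4), noise_std=0.05):
    """Return a randomly perturbed copy of a grayscale image.

    image: list of rows, each a list of floats in [0, 1].
    rng:   a random.Random instance, passed in for reproducibility.
    All default ranges are illustrative, not values from the project.
    """
    brightness = rng.uniform(-max_brightness, max_brightness)
    contrast = rng.uniform(*contrast_range)
    out = []
    for row in image:
        new_row = []
        for p in row:
            # Contrast around mid-gray, then brightness shift, then sensor-like noise
            v = (p - 0.5) * contrast + 0.5 + brightness + rng.gauss(0.0, noise_std)
            new_row.append(min(1.0, max(0.0, v)))  # clamp to the valid range
        out.append(new_row)
    return out


if __name__ == "__main__":
    img = [[0.5] * 4 for _ in range(4)]
    print(augment(img, random.Random(0)))
```

Drawing a fresh perturbation for every training sample exposes the network to lighting variations that the synthetic renderer did not produce.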

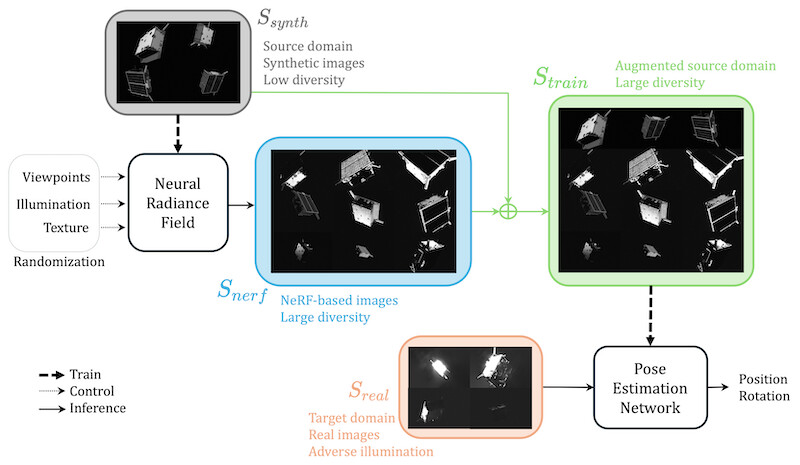

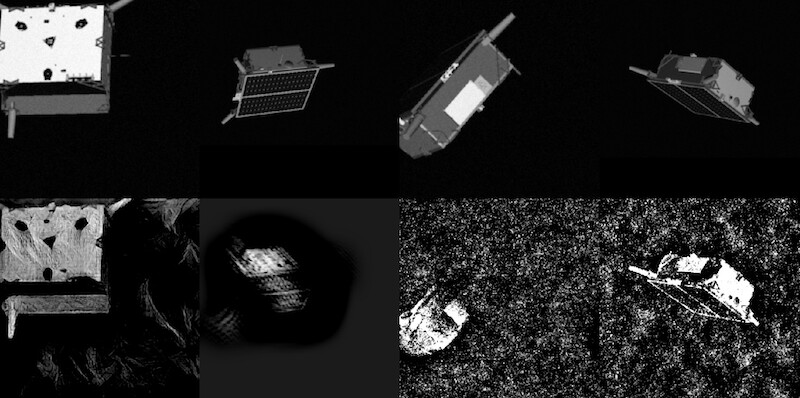

To further improve domain generalization, the project explores Neural Radiance Fields (NeRFs) for image synthesis. In a novel augmentation method, a NeRF trained on synthetic images generates an augmented dataset with a wider variety of viewpoints, richer illumination conditions obtained through appearance extrapolation, and randomized textures. The added diversity in the training data improves the generalization of the pose estimation network, as demonstrated by a 50% reduction in pose error on challenging datasets like SPEED+ [3].
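For readers unfamiliar with how pose error is typically quantified, the sketch below computes a translation error normalised by the true distance and a rotation error as the angle between two quaternions. This follows the general form of the scores used by SPEED-style benchmarks, but it is an assumption that the figure quoted above uses exactly this definition; see [3] for the metric actually reported.

```python
import math


def _normalise(q):
    n = math.sqrt(sum(c * c for c in q))
    return [c / n for c in q]


def pose_error(q_est, t_est, q_gt, t_gt):
    """Return (normalised translation error, rotation error in radians)."""
    # Translation: Euclidean error divided by the ground-truth distance
    diff = math.sqrt(sum((a - b) ** 2 for a, b in zip(t_est, t_gt)))
    dist = math.sqrt(sum(b * b for b in t_gt))
    trans_err = diff / dist
    # Rotation: angle of the relative rotation; abs() handles q and -q
    # representing the same orientation
    dot = abs(sum(a * b for a, b in zip(_normalise(q_est), _normalise(q_gt))))
    rot_err = 2.0 * math.acos(min(1.0, dot))
    return trans_err, rot_err


if __name__ == "__main__":
    q_gt = (1.0, 0.0, 0.0, 0.0)
    q_est = (math.cos(math.pi / 4), math.sin(math.pi / 4), 0.0, 0.0)  # 90 deg about x
    print(pose_error(q_est, (0.0, 0.0, 11.0), q_gt, (0.0, 0.0, 10.0)))
```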

The work also addresses the challenging scenario of estimating the 6D pose of an unknown target spacecraft, for which no CAD model is available. The proposed solution trains an “in-the-wild” NeRF on a sparse collection of real images of the target. This NeRF then synthesizes a large dataset that is diverse in viewpoint and illumination, which in turn is used to train an “off-the-shelf” pose estimation network. The method makes existing pose estimation techniques applicable to unknown targets, performs comparably to models trained with complete CAD models, and has been validated on hardware-in-the-loop simulations that emulate realistic orbital lighting [4].
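Generating a dataset that is diverse in viewpoint requires choosing camera poses to render from. The sketch below shows one simple way to do this, sampling camera positions uniformly over directions around the target and at a random distance, with the camera looking at the target's origin. The distance range and the function name are hypothetical, the NeRF rendering call itself is deliberately omitted, and the sampling scheme actually used in [4] may differ.

```python
import math
import random


def sample_viewpoints(n, rng, min_dist=5.0, max_dist=20.0):
    """Sample n camera viewpoints around a target placed at the origin.

    Returns a list of (position, look_direction) pairs, where look_direction
    is a unit vector pointing from the camera toward the target.
    Distances are in arbitrary units; the default range is illustrative.
    """
    views = []
    for _ in range(n):
        # A normalised 3D Gaussian sample is uniformly distributed on the sphere
        while True:
            d = [rng.gauss(0.0, 1.0) for _ in range(3)]
            norm = math.sqrt(sum(c * c for c in d))
            if norm > 1e-9:
                break
        unit = [c / norm for c in d]
        dist = rng.uniform(min_dist, max_dist)
        position = [c * dist for c in unit]
        look_dir = [-c for c in unit]
        views.append((position, look_dir))
    return views


if __name__ == "__main__":
    for pos, look in sample_viewpoints(3, random.Random(42)):
        print(pos, look)
```

Each sampled viewpoint would then be passed to the trained NeRF to render an image, with the viewpoint itself serving as the pose label for that image.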
