Videos

A selection of videos from various research projects.

These videos are also shown within research projects, along with longer discussions of the research they illustrate.

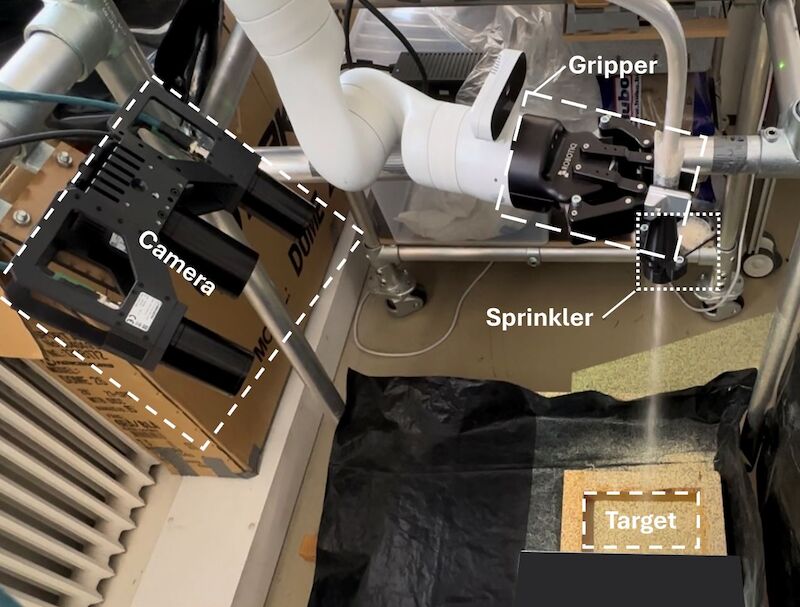

Uncertainty-aware RL with VAE State Denoising for High-occlusion Tasks (2025)

Distributing sand homogeneously on a target surface, despite major visual occlusions caused by the sand plume. More information. Please cite [1].

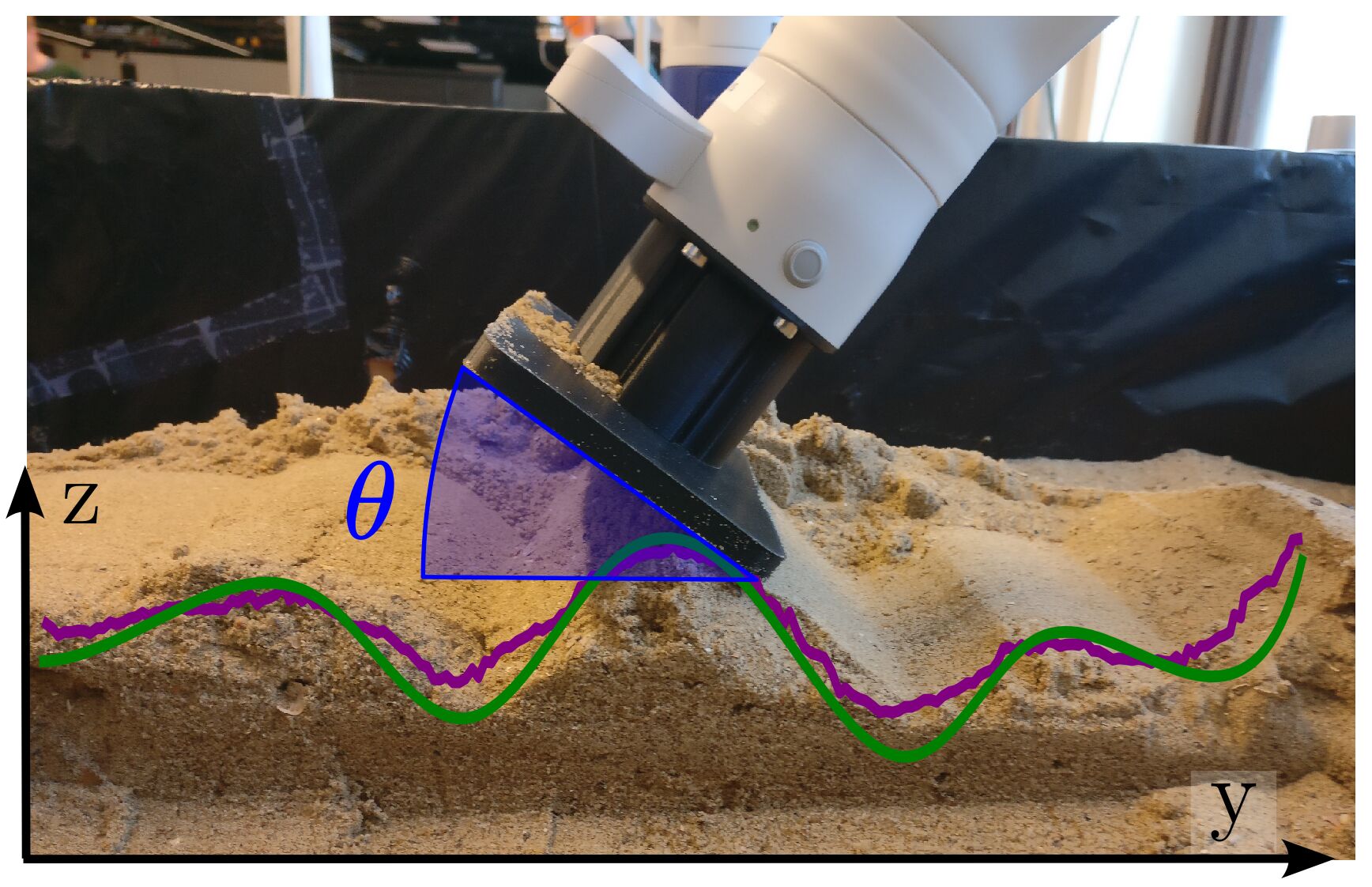

Robot Manipulation of Amorphous Materials (2025)

Autonomous sand grading. More information. Please cite [2].

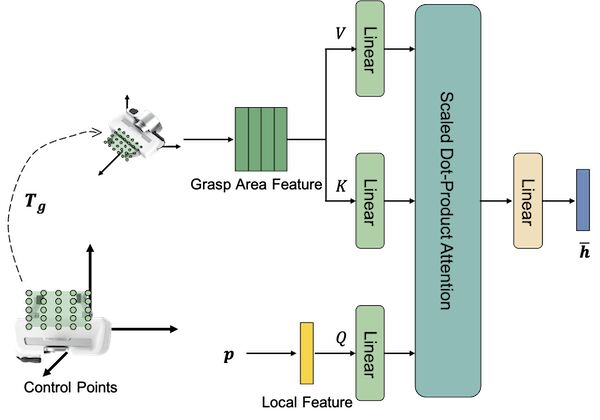

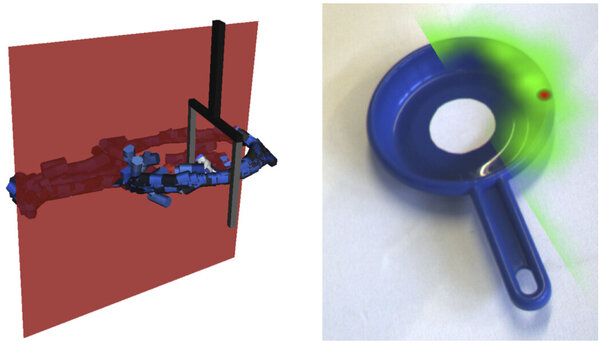

Diffusion-based Grasp Planning with Local Geometric Features

There are two dominant approaches in modern robot grasp planning: dense prediction and sampling-based methods. Dense prediction calculates viable grasps across the robot’s view but is limited to predicting one grasp per voxel. Sampling-based methods, on the other hand, encode multi-modal grasp distributions, allowing for different grasp approaches at a point. However, these methods rely on a global latent representation, which struggles to represent the entire field of view, resulting in coarse grasps. To address this, we introduce Implicit Grasp Diffusion (IGD), which combines the strengths of both methods by using implicit neural representations to extract detailed local features and sampling grasps from diffusion models conditioned on these features. More information. Please cite [3].

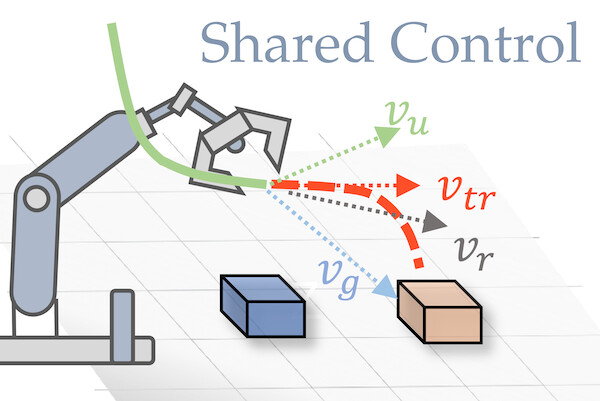

Multimodal Hand Trajectory Anticipation and Shared Control (2024)

We present an intent estimator, dubbed the Robot Trajectron (RT), that produces a probabilistic representation of the robot’s anticipated trajectory based on its recent position, velocity and acceleration history. By taking arm dynamics into account, RT can capture the operator’s intent better than other state-of-the-art models that only use the arm’s position, making it particularly well-suited to assist in tasks where the operator’s intent is susceptible to change. We derive a novel shared-control solution that combines RT’s predictive capacity with a representation of the locations of potential reaching targets. More information. Please cite [4].

Autonomous Climbing (2019)

Beautiful video of a field test we ran in Death Valley in December 2018:

(IEEE Spectrum coverage, YouTube)

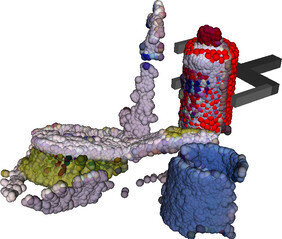

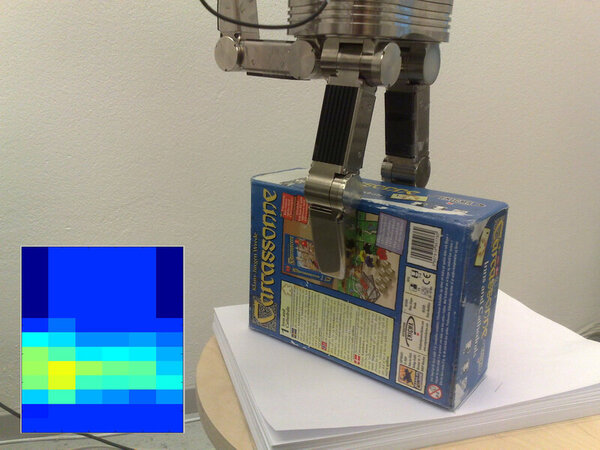

Part-based Grasp Generalization (2015)

Learning prototypical parts by which objects are often grasped, in order to grasp novel objects. Please cite [5].

Video illustrating the capabilities of a robot that learned how to grasp via the method illustrated in the video directly below.

Video illustrating the part learning process.

Grasping Using Tactile And Visual Data (2015)

The robot learns what it feels like to grasp an object. This way, it can abort a grasp that feels unstable before lifting (and potentially breaking) the object. Please cite [6], [7].

Video illustrating pose- and touch-based grasp stability estimation.

Grasp Densities (2010)

Learning grasp affordance models through autonomous interaction. More information. Please cite [8].

Video illustrating pose- and touch-based grasp stability estimation.
